The world of Artificial Intelligence just got a fascinating upgrade. The company DeepSeek has launched its latest model, DeepSeek-V3.1, and it comes with a game-changing feature: it can switch between two different modes of “thinking.”

Think of it as having two brains in one. One is for quick, everyday chats, and the other is for deep, complex problem-solving. This new model is not just a minor update; it’s what DeepSeek calls its “first step toward the agent era,” an era where AI doesn’t just talk to you but actively helps you get things done.

Let’s break down what this means for you in simple terms.

The Biggest New Feature: “Think” and “Non-Think” Modes

Imagine you’re asked two questions:

- “What is 2+2?”

- “What’s the best financial plan for me to retire in 20 years, considering inflation and my current savings?”

For the first question, your brain answers almost instantly. You don’t need to “think” hard; the answer is just there. This is like DeepSeek-V3.1’s “Non-Think” mode. It’s designed for fast, straightforward conversations and simple queries. It answers directly, without first working through a long chain of reasoning, which keeps responses quick and cheap.

For the second question, you’d need to pause, gather information, do some calculations, and reason through different scenarios. This is deep thinking, and it is what DeepSeek-V3.1’s “Think” mode is for. When faced with a complex problem, you can switch to “Think” mode, and the same model will work through the problem step by step before giving its final answer, trading extra time for a better-reasoned result.

This “hybrid” approach matters because it makes the AI more efficient. A single model doesn’t spend extra effort on simple questions but can take more time to reason when needed, just like a person would.
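For developers, switching modes can be as small as changing one field in a request. The sketch below only builds the request body and sends nothing over the network. The model names `deepseek-chat` (Non-Think) and `deepseek-reasoner` (Think) are assumptions based on DeepSeek's public API naming, and `build_request` is a hypothetical helper, so check the current API docs before relying on either.

```python
# Assumption: the hosted API exposes the two modes as two model names.
NON_THINK_MODEL = "deepseek-chat"      # assumed name for "Non-Think" mode
THINK_MODEL = "deepseek-reasoner"      # assumed name for "Think" mode


def build_request(question: str, think: bool) -> dict:
    """Build a chat-completion style request body for the chosen mode."""
    return {
        "model": THINK_MODEL if think else NON_THINK_MODEL,
        "messages": [{"role": "user", "content": question}],
    }


# A simple question goes to the fast mode, a complex one to the deep mode.
quick = build_request("What is 2+2?", think=False)
deep = build_request(
    "What's the best financial plan for me to retire in 20 years?",
    think=True,
)

print(quick["model"])
print(deep["model"])
```

The rest of the request stays the same in both cases; only the mode changes.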

What Else Makes DeepSeek-V3.1 So Special?

Beyond its dual-thinking modes, this new version comes with a host of improvements that make it smarter and more capable.

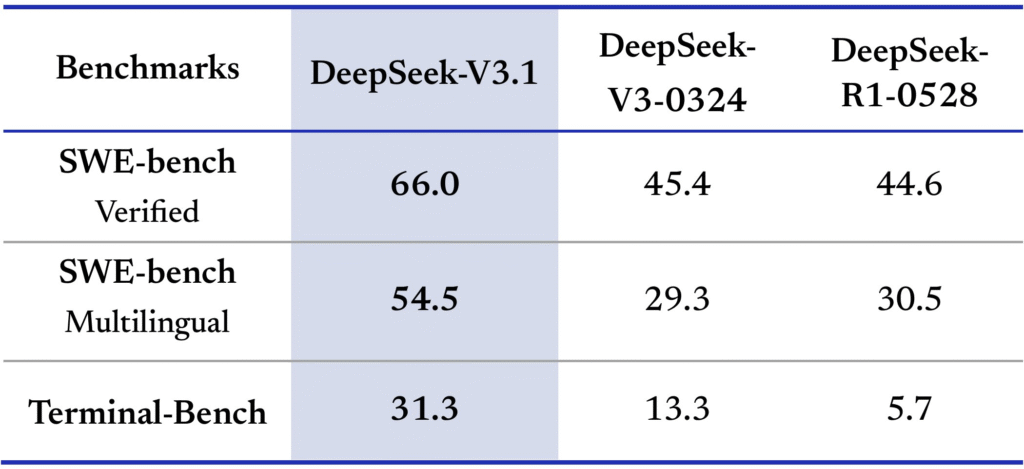

1. It’s a Better Problem-Solver (A True “Agent”)

When tech people talk about an “AI Agent,” they mean an AI that can do things, not just chat. For example, it could book a flight for you, which involves searching for flights, comparing prices, and filling out forms.

DeepSeek-V3.1 is much better at these multi-step tasks. It has been specifically trained to use digital “tools” (like a search engine or a calculator) to complete complex jobs. It’s also much better at difficult tasks like writing computer code and navigating complex command-line interfaces.

2. It Has a Huge Memory

The new model has a “128K context window.” In simple terms, “context” is the AI’s short-term memory. A 128K context window means it can hold about 128,000 tokens (small chunks of text) at once, roughly the length of a 250-page book, in a single conversation. This allows it to handle very long documents, recall details from earlier in the chat, and tackle much more complex projects without forgetting what you’re talking about.
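If you want a rough feel for whether a document fits in that window, a back-of-the-envelope check like the one below can help. It assumes about 4 characters per token, which is a common rule of thumb for English text and not a DeepSeek figure. The helper names and the 4,000 tokens reserved for the reply are illustrative assumptions too. A real check would use the model's own tokenizer.

```python
CONTEXT_WINDOW_TOKENS = 128_000  # "128K" read as 128,000 tokens (assumption)
CHARS_PER_TOKEN = 4              # rough heuristic for English text, not exact


def estimate_tokens(text: str) -> int:
    """Very rough token estimate from the character count, rounded up."""
    return -(-len(text) // CHARS_PER_TOKEN)


def fits_in_context(text: str, reserved_for_reply: int = 4_000) -> bool:
    """True if the text, plus room for a reply, fits in the window."""
    return estimate_tokens(text) + reserved_for_reply <= CONTEXT_WINDOW_TOKENS


# A stand-in for a long document: 90,000 short words (450,000 characters).
document = "word " * 90_000
print(estimate_tokens(document), fits_in_context(document))
```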

3. It’s More Accessible for Developers

For the tech-savvy folks who build apps, DeepSeek has made V3.1 easier to work with:

- Open-Source: They’ve released the base model for free, so developers and researchers around the world can use and build upon it.

- Easier Integration: It now supports popular API formats (like Anthropic’s) and has a feature called “Strict Function Calling.” This simply means developers can describe a tool (e.g., a calculator) along with the exact inputs it expects, and be more confident that when the AI calls that tool, its request follows that format.
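To make “Strict Function Calling” more concrete, here is a minimal sketch of a tool description plus a tiny local check that shows the kind of guarantee strict mode aims for. The tool `add_numbers`, the position of the `"strict"` flag, and the helper `matches_schema` are all hypothetical. The layout follows the widely used OpenAI-style `tools` format and is not confirmed here as DeepSeek's exact API.

```python
# Hypothetical calculator tool in an OpenAI-style "tools" layout.
# The "strict" flag and where it sits are assumptions for illustration.
calculator_tool = {
    "type": "function",
    "function": {
        "name": "add_numbers",
        "description": "Add two numbers and return the sum.",
        "strict": True,
        "parameters": {
            "type": "object",
            "properties": {
                "a": {"type": "number"},
                "b": {"type": "number"},
            },
            "required": ["a", "b"],
            "additionalProperties": False,
        },
    },
}


def is_number(value) -> bool:
    """JSON numbers are ints or floats; Python bools do not count."""
    return isinstance(value, (int, float)) and not isinstance(value, bool)


def matches_schema(arguments: dict, schema: dict) -> bool:
    """Check arguments against a flat, numbers-only schema (illustration)."""
    allowed = set(schema["properties"])
    if set(schema["required"]) - set(arguments):
        return False  # a required argument is missing
    if set(arguments) - allowed:
        return False  # an unexpected argument is present
    return all(is_number(value) for value in arguments.values())


params = calculator_tool["function"]["parameters"]
print(matches_schema({"a": 2, "b": 3}, params))    # well-formed call
print(matches_schema({"a": "2", "b": 3}, params))  # wrong type for "a"
```

With strict mode, the idea is that the model's tool calls should always look like the first example and never like the second.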

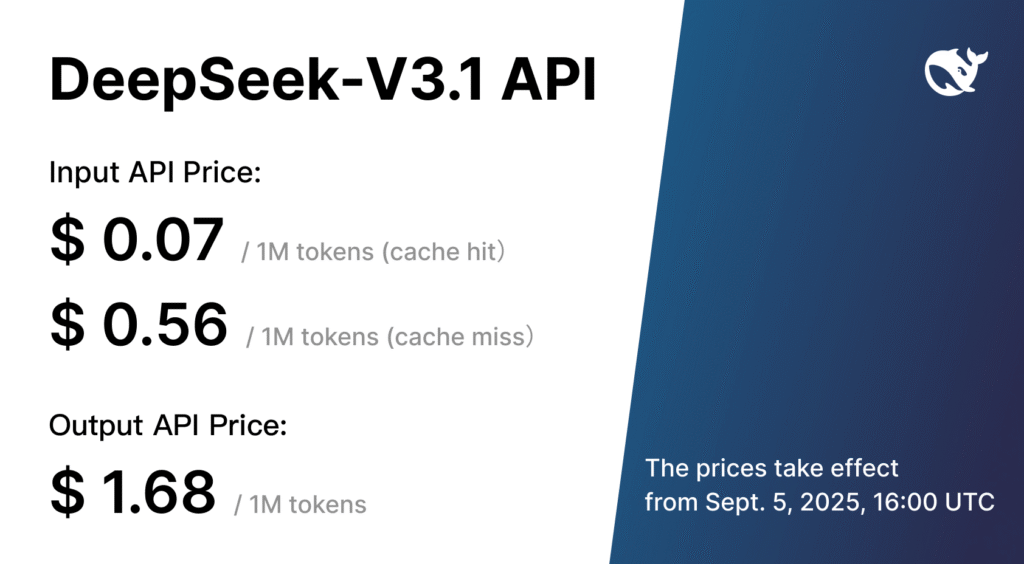

A New, Clearer Pricing Plan

With this new model comes a new pricing structure for developers who use DeepSeek’s service (the API). Here’s a simple guide to understanding it:

First, you need to know what a “token” is. A token is a small chunk of text, often a whole word or a piece of one. For example, the word “apple” might be one token, while “unforgettable” might be broken into “un,” “forget,” and “able” (3 tokens). The exact split depends on the model. AI pricing is based on how many tokens the model processes.

The new pricing, effective September 5th, 2025, is broken down into two parts:

- Input Price (What you send to the AI):

  - $0.56 per 1 million tokens (cache miss): This is the standard rate for the text you ask the AI to process.

  - $0.07 per 1 million tokens (cache hit): This is a huge discount! A “cache” is a temporary memory. If your request begins with text the AI has recently processed, it’s a “cache hit,” and you are charged one-eighth of the standard rate for that part.

- Output Price (What the AI sends back to you):

  - $1.68 per 1 million tokens: The answer the AI generates costs three times the standard input rate, because this is where the real “thinking” and work happens.

This pricing is competitive, and the steep cache-hit discount rewards efficient use of the AI, such as reusing the same long instructions or documents across requests.
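The rates above can be turned into a quick cost estimate. The sketch below uses only the three prices quoted in this article. The function name `estimate_cost` and the example token counts are made up for illustration.

```python
from decimal import Decimal

# US dollars per 1 million tokens, as quoted in this article.
PRICE_PER_MILLION = {
    "input_cache_miss": Decimal("0.56"),
    "input_cache_hit": Decimal("0.07"),
    "output": Decimal("1.68"),
}


def estimate_cost(miss_tokens: int, hit_tokens: int, output_tokens: int) -> Decimal:
    """Estimate the cost in dollars for one batch of usage."""
    usage = {
        "input_cache_miss": miss_tokens,
        "input_cache_hit": hit_tokens,
        "output": output_tokens,
    }
    return sum(
        (PRICE_PER_MILLION[kind] * count / 1_000_000 for kind, count in usage.items()),
        Decimal("0"),
    )


# Example: 200,000 uncached input tokens, 800,000 cached input tokens,
# and 100,000 output tokens.
cost = estimate_cost(200_000, 800_000, 100_000)
print(f"${cost:.3f}")  # prints $0.336
```

In this example, the 800,000 cached tokens cost less than half as much as the 200,000 uncached ones, which is why reusing the same context pays off.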

The Big Picture

DeepSeek-V3.1 isn’t just another chatbot. It’s a powerful tool designed to be a true digital assistant. By giving it the ability to switch between fast and deep thinking, DeepSeek has created a more efficient, capable, and versatile AI. It’s a significant step closer to a future where AI agents seamlessly help us with complex tasks in our daily and professional lives.